My website is unresponsive, but system load is zero! What's going on!

2007, November 30th 3:19 PM

This is what I woke up to four days ago.

By now, anyone reading this entry – and thousands of people who aren't reading this entry – have probably seen my Double-Boiling Your Hard Drive article. I wrote that one up because I thought it was fascinating, and submitted it to Slashdot, Digg, and Reddit just for fun. I got a small pile of hits off those submissions, but not many, and I went to bed assuming those posts would simply fade into oblivion.

While I was sleeping, a friend of mine made a Reddit entry that, to say the least, did quite a bit better. I woke up and my site was so hammered that even I couldn't access it.

Since I spent a lot of time trying to figure out what the issue was and (once again) ended up finding a solution myself, I'll explain the problem and eventual solution here along with a lot of technical garbage that most people probably don't care about. I am, however, going to try to make it understandable to non-geeks – so if you want a bit of a view into how webservers and performance work, read along.

The entire problem stems from the basic method that you use to do communications across the Internet. Here's the simplest way you can write an Internet server of any kind:

    Repeat forever:
        Wait for a connection
        Send and receive data
        Close the connection

This seems reasonable at first glance, but there's one huge issue – you can only process one connection at a time. If Biff connects from a modem, and it takes me 20 seconds to send a webpage to him, that means there's 20 seconds during which nobody else can communicate with the server. Can't download, can't even connect. Worse than that, even the fastest browsers are going to take a few seconds to load a large complex webpage like this one, and that would kill performance completely.
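
For the curious, here's a minimal sketch of that loop in Python. The function name and the `max_connections` parameter are made up for illustration (the parameter exists only so the loop can be stopped), and the "echo" reply is a stand-in for sending a real webpage. It is not how Apache is written; it only shows why one slow client blocks everyone else.

```python
import socket


def serve_one_at_a_time(listener, max_connections=None):
    """Handle connections strictly one after another.

    `listener` is a bound, listening socket. While one connection is
    being served, nobody else gets past accept().
    """
    handled = 0
    while max_connections is None or handled < max_connections:
        conn, _addr = listener.accept()          # wait for a connection
        with conn:
            request = conn.recv(1024)            # receive data
            conn.sendall(b"echo: " + request)    # send data
        # leaving the with-block closes the connection
        handled += 1
    return handled
```

To try it, create a socket with `socket.socket()`, call `bind()` and `listen()` on it, and pass it in. If the `sendall` line takes 20 seconds because of Biff's modem, the next `accept()` doesn't happen for 20 seconds.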

There are many, many solutions to this. The most common web server, Apache, has two main modes you can run it in – prefork and worker – which implement two of those solutions.

First off, prefork. In prefork mode, Apache forks the webserver process into a larger number of processes, which means that there are actually, say, ten copies of Apache running simultaneously. This isn't nearly as bad as it sounds. Any modern operating system is going to realize that most of the data Apache loads, like the program itself and likely all of its configuration data, doesn't change – and if it doesn't change, there's no reason to make one copy of it for each Apache. It's shared among all the processes. If it's shared, you only need a single copy. Low memory usage and everyone is happy.

Unfortunately, Apache isn't the only thing that goes into a standard webserver now. The journal software that I'm using on this site – WordPress – is written in a scripting language called PHP. Scripting languages need an interpreter to work, and so Apache runs the PHP interpreter – one copy per process. The interpreter code itself gets shared in the same way Apache does, but all the temporary structures it builds and all of its working space aren't shared.

It actually gets worse from here. As part of their normal function, most programs allocate and deallocate memory. If they need to load in a big file, they allocate a lot of memory, then deallocate it when closing the file. When they allocate, they first check to see if there's any "spare space" available that the program has already received but isn't currently using. If not, they request more space from the OS. However, most programs will not return space to the OS at any point. They'll just return that unused space to their local pool, the aforementioned "spare space". This means the OS can't know exactly what the program is or isn't using at any one time. The process eventually stabilizes at whatever its worst-case is – if you have one horrible page that takes eight megs of RAM just on its own, and you have your program load pages randomly, your program is going to reach eight megs and then sit on that eight megs forever – even after it's done dealing with that nasty page.

As a result of this, if you're running ten Apache processes, you will eventually be using ten times the maximum amount of RAM that Apache+PHP could use on any one page on your site. That's painful.

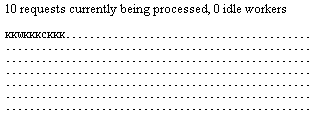

In my case, this site is running on a virtual server with 256 megs of RAM. My average Apache process was eating about 12 megs, and MySQL was consuming another 50 megs, and the OS was taking another chunk. I couldn't get more than ten processes running without absolutely killing my server. (When MySQL crashes due to running out of RAM I really don't care that I can serve error pages 50% faster.)

And this is why, despite the fact that the CPU load was negligible, the site was still completely inaccessible. I had plenty of CPU to generate and send more pages with. I was swimming in spare CPU. But no matter how much CPU I had, I couldn't possibly service more than ten users simultaneously.
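
The arithmetic behind that limit of ten can be sketched in a few lines of Python. The 256, 50, and 12 meg figures are the ones quoted above; the 80 megs for the OS is an assumed number picked for illustration, since "another chunk" was never measured, and the function name is hypothetical.

```python
def max_processes(total_mb, mysql_mb, os_mb, per_process_mb):
    """How many server processes fit in the RAM left over."""
    available = total_mb - mysql_mb - os_mb
    return max(available // per_process_mb, 0)


# 256 MB server, 50 MB for MySQL, 12 MB per Apache+PHP process.
# The 80 MB for the OS is an assumed figure, not a measurement.
print(max_processes(256, 50, 80, 12))  # prints 10
```

Notice that CPU doesn't appear anywhere in the calculation. RAM alone sets the ceiling.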

Now, back to those Apache modes! What I've just described up there – with one process per connection – is "prefork" mode. There's another newer mode called "worker" mode.

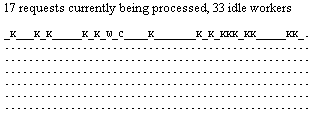

In worker mode, Apache spawns one thread per connection. You can think of threads as sub-processes – they run inside the same process and all have access to the same memory and data.

Remember all that stuff I wrote about programs returning memory to the OS? They don't return memory to the OS – but they'll gladly return it to the process. Every copy of PHP that gets run can reclaim the exact same memory and re-use it, even while its siblings are sending and receiving data.

By default, worker mode spawns 25 threads per process, with multiple processes if it needs more connections than that. Under heavy load, each thread spends most of its time sending or receiving data (Biff's crummy modem again). In reality only one or two threads will actually be running PHP at a time – so the memory usage for this single process is, at most, twice that of the prefork processes. But we can now handle 25 times as many connections.
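
Here's a rough sketch of that comparison in Python, using the numbers from this post: about 12 megs per prefork process, and a worker process budgeted at twice that as the worst case. The function name is made up, and real memory usage will vary from page to page.

```python
def capacity(processes, threads_per_process, mb_per_process):
    """Return (simultaneous connections, total megabytes of RAM)."""
    return processes * threads_per_process, processes * mb_per_process


# Prefork: ten single-connection processes at about 12 MB each.
prefork = capacity(processes=10, threads_per_process=1, mb_per_process=12)

# Worker: one process with 25 threads, budgeted at twice a prefork
# process (the "at most twice" worst case).
worker = capacity(processes=1, threads_per_process=25, mb_per_process=24)

print(prefork)  # (10, 120)
print(worker)   # (25, 24)
```

So a single worker process handles more connections than ten prefork processes, in roughly a fifth of the RAM.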

I finally got this mode up and running, and suddenly my site was not just usable, but 100% responsive. No slowdown whatsoever. However, you'll notice I haven't given any kind of detailed instructions on making this work, and there's a good reason for it. This is a terrible long-term solution and I was crossing my fingers the entire time, hoping it wouldn't melt down.

Here's the issue with threading. Imagine you have a blind cook in a kitchen. (I'm avoiding the classic car analogy.) He can cook easily, because he knows where everything is, and he knows where he may have moved things – he can take a pot down, put it on the stove, chop an onion, toss it in the pot, and the pot is still there. He's blind, but it doesn't matter, because nobody is mucking about with his kitchen besides him. No problems.

Now imagine that we have a huge industrial kitchen, with fifty blind cooks, all sharing the same stovetops and equipment. The cooks would get pots mixed up, interfere in each other's recipes, and there would probably be a lot of fingers lost. Threading, unless you're careful, can be equally catastrophic – all the threads work in the same memory space and they can easily stomp all over each other's data.
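
"Being careful" usually means making the cooks take turns with anything shared. Below is a minimal Python sketch of that idea using a lock. The `Kitchen` class and `run_cooks` function are hypothetical names for illustration, and this shows the general technique only, not how PHP or Apache handle it internally.

```python
import threading


class Kitchen:
    """Shared state that many threads (cooks) touch at once."""

    def __init__(self):
        self.onions_chopped = 0
        self._lock = threading.Lock()

    def chop_onion(self):
        # Only one cook may update the shared count at a time.
        with self._lock:
            self.onions_chopped += 1


def run_cooks(kitchen, cooks=50, onions_each=1000):
    """Start `cooks` threads that all work in the same kitchen."""
    def work():
        for _ in range(onions_each):
            kitchen.chop_onion()

    threads = [threading.Thread(target=work) for _ in range(cooks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return kitchen.onions_chopped
```

With the lock in place, fifty cooks chopping a thousand onions each always end up with a count of 50,000. The catch is that every piece of shared data needs this treatment, and a single library that skips it is enough to cause trouble.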

PHP, in theory, is threadsafe. Some of the libraries that PHP calls are threadsafe. Not all of them. It worked, for a day – but I wouldn't want to rely on it long-term.

There is a solution to this. It's just a horrible bitch.

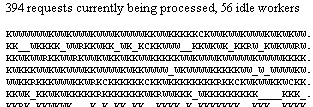

There's a module called FastCGI that you can use with Apache in worker mode. FastCGI is threadsafe. FastCGI can be set up to call a specially-built version of PHP, and do so in multiple processes so PHP doesn't even have to be threadsafe. To make things even better, FastCGI keeps a small "pool" of PHPs – perhaps three or five – but nowhere near one per connection. This does mean that you can only have five PHP sessions running at once, but remember that PHP processing is fast on our server! Apache is smart enough to read all the input, do all the PHP processing quickly in memory, and then sit there waiting for Biff's modem to acknowledge all the data. Five PHP instances can easily service a few dozen connections.
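
The pool idea can be sketched in Python like this. It's a simplified model and not FastCGI itself: FastCGI uses separate PHP processes, while this sketch uses a pool of five threads as a stand-in, with a short sleep standing in for the PHP work. All names here are made up for illustration.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def serve_with_pool(requests, pool_size=5, work_seconds=0.01):
    """Run every request on a fixed pool; return results and peak concurrency."""
    lock = threading.Lock()
    state = {"busy": 0, "peak": 0}

    def render(request):
        with lock:
            state["busy"] += 1
            state["peak"] = max(state["peak"], state["busy"])
        time.sleep(work_seconds)          # stand-in for running PHP
        with lock:
            state["busy"] -= 1
        return f"page for {request}"

    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        # map() queues all requests; only pool_size run at any moment.
        results = list(pool.map(render, requests))
    return results, state["peak"]
```

Calling `serve_with_pool(range(40))` gets all forty pages rendered while never running more than five renders at once. The faster each render finishes, the more connections a small pool can keep up with.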

Unfortunately, Debian Linux (and likely others) doesn't have particularly good native support for this. All the modules do exist in one form or another (apache2-mpm-worker, libapache2-mod-fastcgi, php5-cgi) but just installing them doesn't do the trick – you need to hook them together. Luckily, the FastCGI FAQ does mention everything you'd need for this (look under "config"). It's annoying to set up, but it's not really difficult – just irritating.

FastCGI on its own doesn't solve all the problems. WordPress is, actually, a CPU-hungry beast. Five PHP instances might be able to service a few dozen connections, but not hundreds – WordPress pages involve a lot of database queries and a lot of work. But solving this issue can be accomplished nicely by installing WP Super Cache – it will cache pages as they're displayed and it hugely decreases CPU usage, meaning that those same five PHP instances can now serve well over a hundred.
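
The basic idea behind page caching fits in a few lines of Python. This is a sketch of the general technique only, and I'm not claiming it's how the caching plugin is actually implemented. The `PageCache` class and its methods are hypothetical names.

```python
class PageCache:
    """Cache rendered pages so repeat requests skip the expensive work."""

    def __init__(self, render):
        self._render = render      # expensive function: url -> HTML
        self._pages = {}
        self.renders = 0           # how many times we did the real work

    def get(self, url):
        if url not in self._pages:
            self._pages[url] = self._render(url)
            self.renders += 1
        return self._pages[url]

    def invalidate(self, url):
        # Call when the underlying post or comments change.
        self._pages.pop(url, None)
```

A hundred requests for the same page cost one render and ninety-nine dictionary lookups. The database queries only run again after `invalidate` is called for that page.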

Before these changes, my server couldn't handle more than ten simultaneous connections. I've used website stresstesting software since – I've managed up to 400, and the server doesn't even break a sweat. I can't do higher because my connection starts dying horribly.

There's no real excuse for any modern server to have trouble with this sort of load, unless it's doing extremely heavy noncacheable processing or getting hundreds of simultaneous connections. Computers are fast, and getting faster all the time – at this point I'd love to see this site get Digged or even Slashdotted, because I'm truly curious what it could stand up to.

I'm hoping that someone with this same problem will find this page and be able to fix it quickly. It's not that hard – it's just kind of annoying.

Robin Battey

2007, November 30th 8:41 PM

Your description of the prefork mode isn't correct. There are two details that are very important for your scenario, in both prefork and worker mpms:

1. There is a *single* control process that accepts the connection and hands it off to a spare server.

2. You do *not* keep the processes around forever. There is a MaxRequestsPerChild directive (default is 10,000) that determines when a child is killed and replaced. This means that you *don't* stabilize on the worst-case scenario. (You might want to reduce your number to something like 100, though.)

Also, in worker mode, you still have a number of processes. I think there's typically a max of 25 threads per process by default, but it's configurable in any case.

You're right that php isn't threadsafe yet, so using the worker mpm with mod_php is a bad idea. However, you can use the worker mpm with a cgi interface to php (there's a *really* easy way to set this up in debian, contact me if you want more details), that gets around all php threading problems by invoking a separate php interpreter process for each php request. This is what I use. FastCGI is even better, of course, because it re-uses the php process, and skips the "load all modules" step with each, but it's a little more work to set up.

There are also a few caching options in apache2 itself. This could alleviate a lot of your CPU problems, but it's just an RFC2616 (http protocol) compliant cache, and I don't know how well it plays with wordpress. I would *definitely* move your mysql server to a different server than your webserver, though. I use this in front of tomcat applications, to cache all static or near-static content.

Cheers!

-robin

Zorba

2007, November 30th 9:03 PM

I glossed over some of the details for convenience's sake. The existence of a central coordination process really doesn't matter for this application since it wasn't any sort of a problem (is it ever?) and the various forked processes were reaching their standard memory size pretty much instantly.

Also, you'll note that I did say worker mode was 25 threads per process with extra processes for more than that.

I avoided the whole pure-CGI-to-PHP thing just due to speed, and the fact that it's not really any harder to configure FastCGI (as well as having better and more standard support.) No point in mentioning a bad solution. And I didn't bother with apache2 caches for exactly that reason – there's no way Apache can know when things have changed, so I might as well do it the right way first.

Considering that a lot of this was "let's see if I can make it run quickly with the hardware I have", I didn't really think a separate server was useful. After all, why use two servers when you can just have one? It'd be cheaper for me to just buy twice as much RAM for that one server, anyway, and it's still nowhere near CPU bound.

Anonymous

2007, December 1st 4:56 PM

Well, there *is* a standardized way for apache to know when something has changed, but you have to add caching headers to your php output, which can be bothersome at first. In the end, it makes a *lot* of things much faster, though, because the client will cache it as well (IE and Firefox do, anyway).